Last time we had a brief encounter with the island of two truths, invented by Karin Cvetko-Vah. See her posts:

On this island, false statements have truth-value $0$ (as usual), but non-false statements are not simply 'true': they are given either truth-value $Q$ (statements the Queen of the island prefers) or $K$ (those preferred by the King).

Think of the island as Trump’s paradise where nobody is ever able to say: “Look, alternative truths are not truths. They’re falsehoods.”

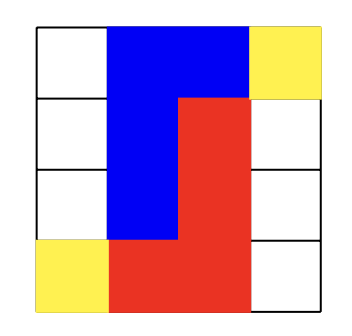

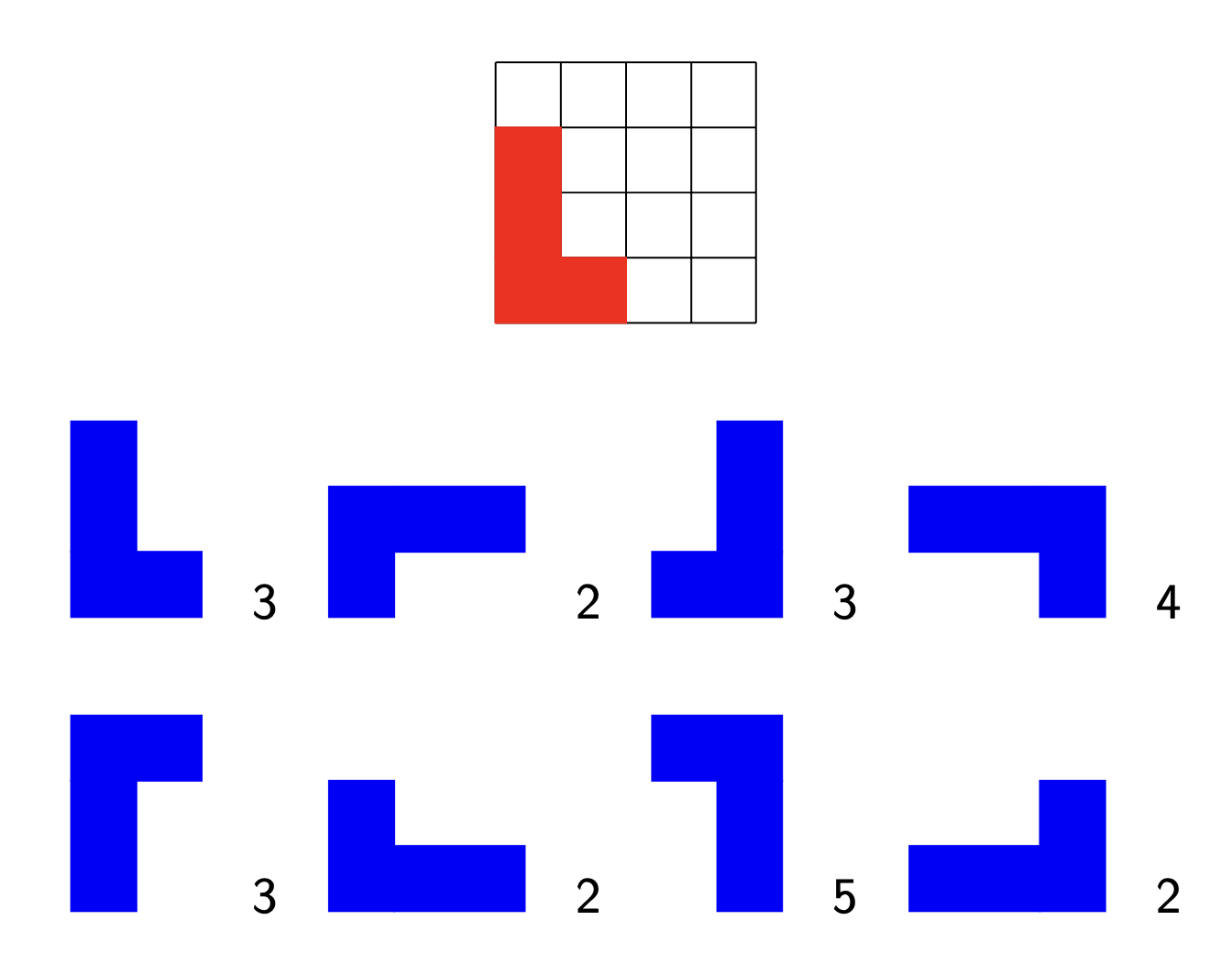

Even the presence of just one ‘alternative truth’ has dramatic consequences for the rationality of your reasoning. If we know the truth-values of specific sentences, we can determine the truth-value of more complex sentences built with the logical connectives $\vee$ (or), $\wedge$ (and), $\neg$ (not) and $\implies$ (if-then) via these truth tables (the row gives the left argument, the column the right one):

\[
\begin{array}{c|ccc}
\downarrow~\wedge~\rightarrow & 0 & Q & K \\
\hline
0 & 0 & 0 & 0 \\
Q & 0 & Q & Q \\
K & 0 & K & K
\end{array} \quad
\begin{array}{c|ccc}
\downarrow~\vee~\rightarrow & 0 & Q & K \\
\hline
0 & 0 & Q & K \\
Q & Q & Q & K \\
K & K & Q & K
\end{array}
\]

\[
\begin{array}{c|ccc}
\downarrow~\implies~\rightarrow & 0 & Q & K \\
\hline
0 & Q & Q & K \\
Q & 0 & Q & K \\
K & 0 & Q & K
\end{array} \quad
\begin{array}{c|c}
\downarrow & \neg~\downarrow \\
\hline
0 & Q \\
Q & 0 \\
K & 0
\end{array}
\]
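If you want to play with these tables, here is a minimal Python sketch that copies them into dictionaries. The names `VALUES`, `table`, `AND`, `OR`, `IMP` and `NOT` are my own choices, not anything from Karin's posts. It also checks a few features you can read off the tables: on the non-false values $\wedge$ keeps its left argument and $\vee$ its right one (so neither is commutative), and $\neg p$ coincides with $p \implies 0$.

```python
VALUES = ("0", "Q", "K")

def table(rows):
    """Dict (left, right) -> value, built from table rows as printed above."""
    return {(x, y): v
            for x, row in zip(VALUES, rows)
            for y, v in zip(VALUES, row.split())}

AND = table(["0 0 0", "0 Q Q", "0 K K"])
OR = table(["0 Q K", "Q Q K", "K Q K"])
IMP = table(["Q Q K", "0 Q K", "0 Q K"])
NOT = {"0": "Q", "Q": "0", "K": "0"}

nonzero = ("Q", "K")
# On non-false values, "and" keeps the left argument, "or" keeps the right one.
left_and = all(AND[x, y] == x for x in nonzero for y in nonzero)
right_or = all(OR[x, y] == y for x in nonzero for y in nonzero)
# Negation agrees with "implies false": not p == (p => 0).
not_is_imp_false = all(NOT[p] == IMP[p, "0"] for p in VALUES)

print(left_and, right_or, not_is_imp_false)  # True True True
print(AND["Q", "K"], AND["K", "Q"])          # Q K
```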

Note that the truth-values $Q$ and $K$ are not on a completely equal footing, as we have to choose which one of them stands for $\neg 0$ (here, $Q$).

Classical tautologies are no longer valid on this island. The best we can hope for are $Q$-tautologies (taking value $Q$ whatever the values of their components) or $K$-tautologies.

Here’s one $Q$-tautology (check!) : $(\neg p) \vee (\neg \neg p)$. Verify that $p \vee (\neg p)$ is neither a $Q$- nor a $K$-tautology.
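To do the checking by machine, here is a small Python sketch for formulas in a single variable $p$ (the helper `tautology_kind` and the formula names are hypothetical, made up for this illustration). It simply evaluates a formula at $p = 0, Q, K$ using the tables above.

```python
VALUES = ("0", "Q", "K")

def table(rows):
    """Dict (left, right) -> value, built from table rows as printed above."""
    return {(x, y): v
            for x, row in zip(VALUES, rows)
            for y, v in zip(VALUES, row.split())}

OR = table(["0 Q K", "Q Q K", "K Q K"])
NOT = {"0": "Q", "Q": "0", "K": "0"}

def tautology_kind(formula):
    """Return 'Q' or 'K' if the one-variable formula always takes that value, else None."""
    values = {formula(p) for p in VALUES}
    if len(values) == 1 and values != {"0"}:
        return next(iter(values))
    return None

def neg_or_negneg(p):    # (not p) or (not not p)
    return OR[NOT[p], NOT[NOT[p]]]

def excluded_middle(p):  # p or (not p)
    return OR[p, NOT[p]]

print([neg_or_negneg(p) for p in VALUES], tautology_kind(neg_or_negneg))      # ['Q', 'Q', 'Q'] Q
print([excluded_middle(p) for p in VALUES], tautology_kind(excluded_middle))  # ['Q', 'Q', 'K'] None
```

You can feed your own candidate formulas to `tautology_kind` while hunting for a $K$-tautology; formulas in several variables would need a loop over all combinations of values.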

Can you find any $K$-tautology at all?

Already this makes it incredibly difficult to adapt Smullyan-like Knights and Knaves puzzles to this skewed island. Last time I gave one easy example.

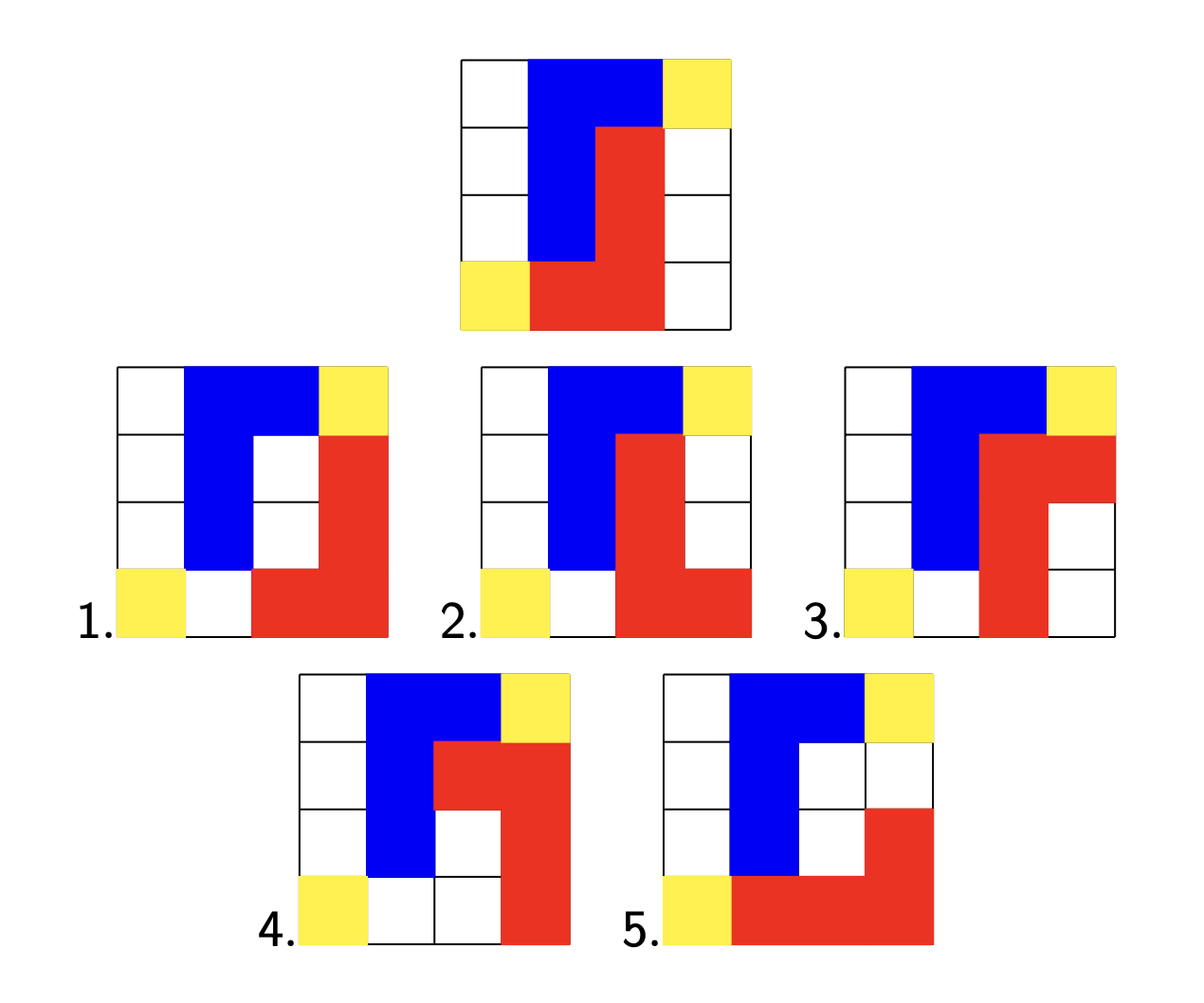

Puzzle : On an island of two truths all inhabitants are either Knaves (saying only false statements), Q-Knights (saying only $Q$-valued statements) or K-Knights (who only say $K$-valued statements).

The King came across three inhabitants, whom we will call $A$, $B$ and $C$. He asked $A$: “Are you one of my Knights?” $A$ answered, but so indistinctly that the King could not understand what he said.

He then asked $B$: “What did he say?” $B$ replied: “He said that he is a Knave.” At this point, $C$ piped up and said: “That’s not true!”

Was $C$ a Knave, a Q-Knight or a K-Knight?

Solution : Q- and K-Knights can never claim to be a Knave, because that claim would be false. Neither can Knaves, because coming from a Knave the claim would not be false, and Knaves only say false statements. So no inhabitant of the island can ever claim to be a Knave. Hence $B$ lies and is a Knave, so his statement has truth-value $0$. $C$ claims the negation of what $B$ says, so the truth-value of his statement is $\neg 0 = Q$. $C$ must be a Q-Knight.
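The same reasoning can be replayed in a few lines of Python. This is only a sketch under one stated assumption: the statement “I am a Knave” gets value $0$ exactly when the speaker is not a Knave. The names `TYPES` and `can_claim_to_be_knave` are hypothetical.

```python
# Each type of inhabitant and the only truth-value it can utter.
TYPES = {"Knave": "0", "Q-Knight": "Q", "K-Knight": "K"}
NOT = {"0": "Q", "Q": "0", "K": "0"}

def can_claim_to_be_knave(kind):
    # Assumption: "I am a Knave" is false (value 0) exactly when the speaker is not a Knave.
    claim_is_false = kind != "Knave"
    # Knaves need a false statement, Knights need a non-false one.
    speaker_needs_false = TYPES[kind] == "0"
    return claim_is_false == speaker_needs_false

# Nobody can claim to be a Knave, so B's report is false: B is a Knave.
nobody_can = not any(can_claim_to_be_knave(k) for k in TYPES)
b_value = "0"
c_value = NOT[b_value]  # C asserts the negation of B's statement
c_type = next(k for k, v in TYPES.items() if v == c_value)
print(nobody_can, c_type)  # True Q-Knight
```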

As if this were not difficult enough, Karin likes to complicate things further by letting the Queen and King assign their own truth-values to all sentences, which may or may not coincide with their actual truth-values.

Naturally, both truth-assignments must follow the logic of the island of two truths for compound sentences, and we impose one additional rule: if the Queen assigns value $0$ to a statement, then so does the King, and vice versa.

I guess she wanted to set the stage for island-of-two-truths variations on the epistemic modal logic puzzles in Smullyan’s book Forever Undecided (for a quick summary, have a look at Smullyan’s paper Logicians who reason about themselves).

A possible interpretation of the Queen’s truth-assignment is that she assigns value $Q$ to all statements she believes to be true, value $0$ to all statements she believes to be false, and value $K$ to all statements she has no fixed opinion on (she neither believes them to be true nor false). The King assigns value $K$ to all statements he believes to be true, $0$ to those he believes to be false, and $Q$ to those he has no fixed opinion on.

For example, if the Queen has no fixed opinion on $p$ (so she assigns value $K$ to it), then the King can either believe $p$ (if he also assigns value $K$ to it) or can have no fixed opinion on $p$ (if he assigns value $Q$ to it), but he can never believe $p$ to be false.
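Here is a small Python sketch of this reading of the two assignments. The dictionaries `QUEEN_READS` and `KING_READS` and the function `compatible` are my own hypothetical names; the function encodes only the extra rule that the Queen assigns $0$ exactly when the King does. For each value the Queen may give, it lists the King's possible attitudes.

```python
VALUES = ("0", "Q", "K")

# How each ruler's value translates into a belief (interpretation from the text above).
QUEEN_READS = {"Q": "believes it", "0": "believes it false", "K": "has no fixed opinion"}
KING_READS = {"K": "believes it", "0": "believes it false", "Q": "has no fixed opinion"}

def compatible(queen_value, king_value):
    """The extra rule: the Queen assigns 0 exactly when the King does."""
    return (queen_value == "0") == (king_value == "0")

for q in VALUES:
    options = [KING_READS[k] for k in VALUES if compatible(q, k)]
    print(f"Queen {QUEEN_READS[q]} -> King: {options}")
# last line: Queen has no fixed opinion -> King: ['has no fixed opinion', 'believes it']
```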

Puzzle : We say that Queen and King ‘agree’ on a statement $p$ if they both assign the same value to it. So they agree on all statements that one of them (and hence both) believes to be false. But there’s more:

- Show that Queen and King agree on the negation of all statements one of them believes to be false.

- Show that the King never believes the negation of any statement.

- Show that the Queen believes all negations of statements the King believes to be false.

Solution : If one of them believes $p$ to be false, (s)he assigns value $0$ to $p$ (and so does the other), and then they both have to assign value $\neg 0 = Q$ to $\neg p$, so they agree on it.

By the negation table, $\neg p$ only takes the values $0$ and $Q$, never $K$, so the King does not believe $\neg p$.

If the King believes $p$ to be false, he assigns value $0$ to it, and so does the Queen; but then the Queen assigns value $Q$ to $\neg p$, so she believes $\neg p$.
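The three claims can also be checked by brute force. In this Python sketch (the variable names are hypothetical) we list every pair of values the Queen and King may give a statement $p$ under the extra rule, and assume, as required above, that both compute the value of $\neg p$ with the island's negation table.

```python
VALUES = ("0", "Q", "K")
NOT = {"0": "Q", "Q": "0", "K": "0"}

# All (queen, king) value pairs for p allowed by the rule
# "the Queen assigns 0 exactly when the King does".
pairs = [(q, k) for q in VALUES for k in VALUES if (q == "0") == (k == "0")]

# 1. If p is believed false (value 0 for both), they agree on not p.
claim1 = all(NOT[q] == NOT[k] for q, k in pairs if q == "0")
# 2. The King never believes not p: his value for it is never K.
claim2 = all(NOT[k] != "K" for _, k in pairs)
# 3. If the King believes p false, the Queen gives not p the value Q.
claim3 = all(NOT[q] == "Q" for q, k in pairs if k == "0")

print(claim1, claim2, claim3)  # True True True
```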

We see that the Queen and King agree on a lot of statements: they agree on all statements one of them believes to be false, and on the negations of such statements!

Can you find any statement at all on which they do not agree?

Well, that may be a little bit premature. We didn’t say which sentences about the island are allowed, and what the connection (if any) is between the Queen and King’s value-assignments and the actual truth values.

For example, the Queen and King may agree on a classical ($0$ or $1$) truth-assignment to the atomic sentences of the island, and replace all $1$’s with $Q$. This gives a consistent assignment of truth-values, compatible with the island’s strange logic. (We cannot do the same trick replacing $1$’s by $K$, because $\neg 0 = Q$.)
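Why does the trick work? Restricted to $\{0, Q\}$, the four tables are exactly the classical ones with $1$ renamed $Q$, so the values of compound sentences never leave $\{0, Q\}$. The Python sketch below checks this (the function `classical_copy` is a hypothetical helper), and shows that the renaming $1 \mapsto K$ fails, already for $\neg$ and $\implies$.

```python
VALUES = ("0", "Q", "K")

def table(rows):
    """Dict (left, right) -> value, built from table rows as printed above."""
    return {(x, y): v
            for x, row in zip(VALUES, rows)
            for y, v in zip(VALUES, row.split())}

AND = table(["0 0 0", "0 Q Q", "0 K K"])
OR = table(["0 Q K", "Q Q K", "K Q K"])
IMP = table(["Q Q K", "0 Q K", "0 Q K"])
NOT = {"0": "Q", "Q": "0", "K": "0"}

def classical_copy(one):
    """Check that {0, one} mirrors Boolean logic when 1 is renamed to `one`."""
    to_island = {False: "0", True: one}
    bools = (False, True)
    ok_binary = all(
        AND[to_island[a], to_island[b]] == to_island[a and b]
        and OR[to_island[a], to_island[b]] == to_island[a or b]
        and IMP[to_island[a], to_island[b]] == to_island[(not a) or b]
        for a in bools for b in bools)
    ok_not = all(NOT[to_island[a]] == to_island[not a] for a in bools)
    return ok_binary and ok_not

print(classical_copy("Q"), classical_copy("K"))  # True False
```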

Clearly, such a system may have no relation at all with the intended meaning of these sentences on the island (the actual truth-values).

That’s why Karin Cvetko-Vah introduced the notions of ‘loyalty’ and ‘sanity’ for inhabitants of the island. That’s for next time, and perhaps then you’ll be able to answer the question whether Queen and King agree on all statements.

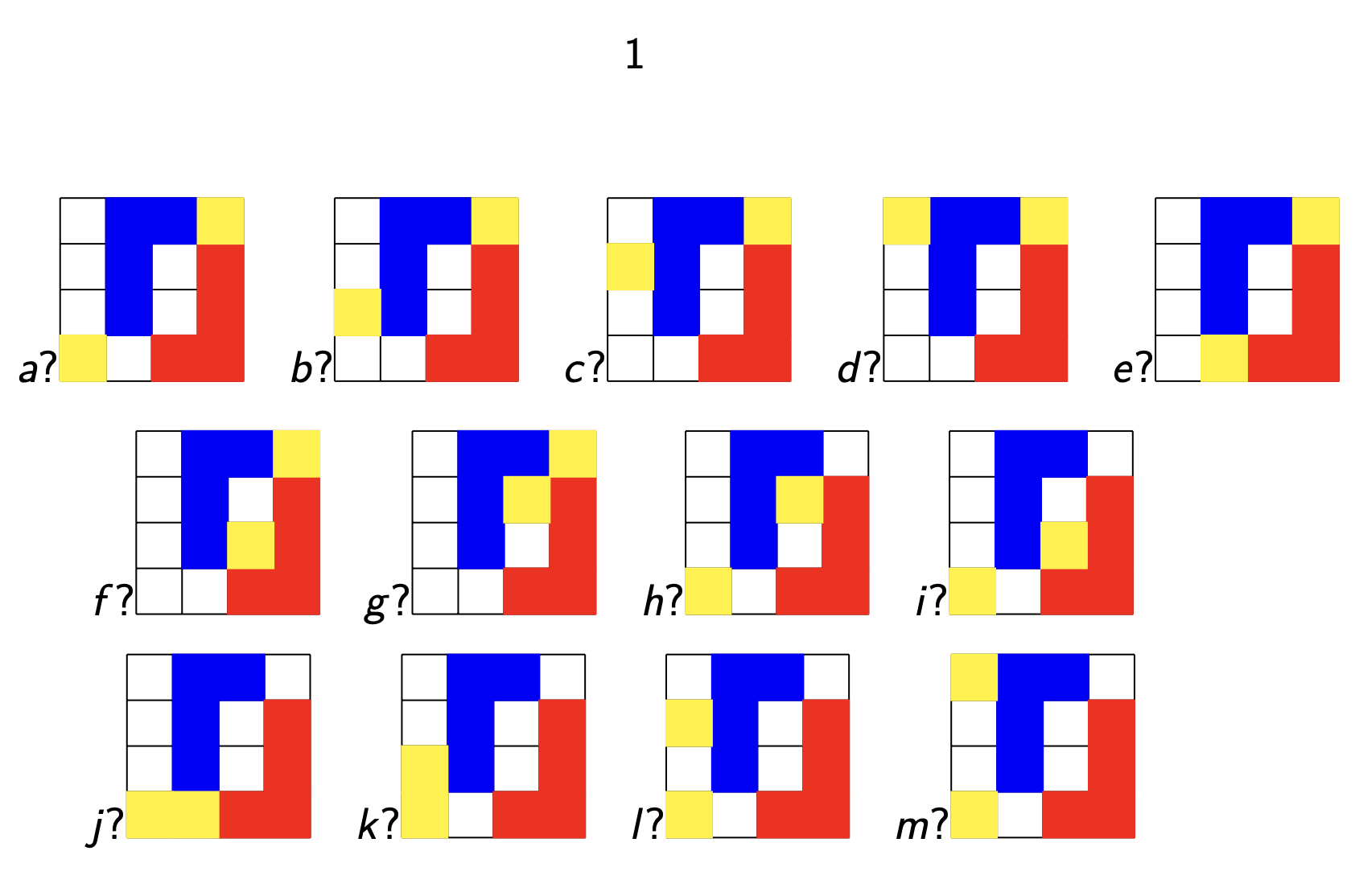

(all images in this post are from Smullyan’s book Alice in Puzzle-Land)
