In the previous posts, we have depicted the ‘arithmetic line’, that is the prime numbers, as a ‘line’ and individual primes as ‘points’.

However, sometime in the roaring sixties, Barry Mazur launched the crazy idea of viewing the affine spectrum of the integers, $\mathbf{spec}(\mathbb{Z}) $, as a 3-dimensional manifold and the prime numbers themselves as knots in this 3-manifold…

After a long silence, this idea was taken up by Mikhail Kapranov and Alexander Reznikov (1960-2003) in lectures at the MPI-Bonn in August 1996. Pieter Moree tells the story in his recollections about Alexander (Sacha) Reznikov in Sipping Tea with Sacha : “Sasha’s paper is closely related to his paper where the analogy of covers of three-manifolds and class field theory plays a big role (an analogy that was apparently first noticed by B. Mazur). Sasha and Mikhail Kapranov (at the time also at the institute) were both very interested in this analogy. Eventually, in August 1996, Kapranov and Reznikov both lectured on this (and I explained in about 10 minutes my contribution to Reznikov’s proof). I was pleased to learn some time ago that this lecture series even made it into the literature, see Morishita’s ‘On certain analogies between knots and primes’ J. reine angew. Math 550 (2002) 141-167.”

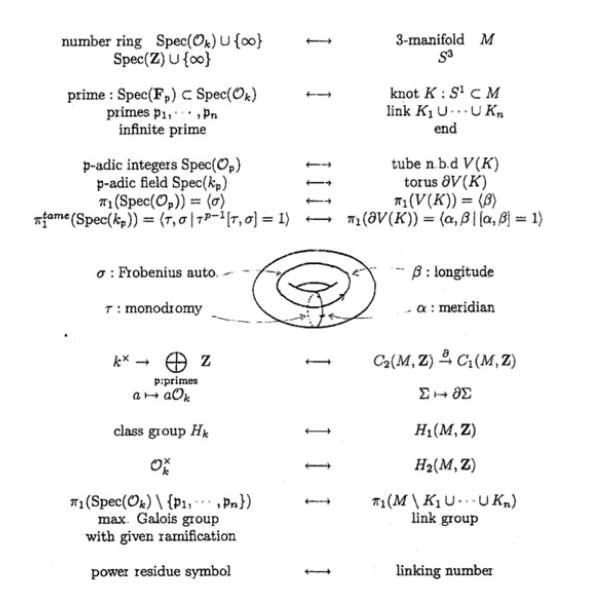

Here’s a part of what is now called the Kapranov-Reznikov-Mazur dictionary :

What is the rationale behind this dictionary? Well, it all has to do with trying to make sense of the (algebraic) fundamental group $\pi_1^{alg}(X) $ of a general scheme $X $. Recall that for a manifold $M $ there are two different ways to define its fundamental group $\pi_1(M) $ : either as the closed loops based at a given basepoint, up to homotopy, or as the automorphism group of the universal cover $\tilde{M} $ of $M $.

For an arbitrary scheme the first definition doesn’t make sense but we can use the second one as we have a good notion of a (finite) cover : an etale morphism $Y \rightarrow X $ of the scheme $X $. As they form an inverse system, we can take their finite automorphism groups $Aut_X(Y) $ and take their projective limit along the system and call this the algebraic fundamental group $\pi^{alg}_1(X) $.
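
In symbols, the construction above can be sketched as follows. The limit is taken over finite etale covers $Y \rightarrow X $ that are connected and Galois (an assumption made explicit here), and the formula glosses over the choice of a base point and over conventions about left versus right actions :

$$\pi^{alg}_1(X) = \varprojlim_{Y \rightarrow X} Aut_X(Y)$$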

Hendrik Lenstra has written beautiful course notes on ‘Galois theory for schemes’ on all of this starting from scratch. Besides, there are also two video-lectures available on this at the MSRI-website : Etale fundamental groups 1 by H.W. Lenstra and Etale fundamental groups 2 by F. Pop.

But, what is the connection with the ‘usual’ fundamental group in case both of them can be defined? Well, by construction the algebraic fundamental group is always a profinite group and in the case of manifolds it coincides with the profinite completion of the standard fundamental group, that is,

$\pi^{alg}_1(M) \simeq \widehat{\pi_1(M)} $ (recall that the profinite completion is the projective limit of all finite group quotients).
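
Spelled out as a formula, with $N $ running over the normal subgroups of finite index in a group $G $, and with the integers as the basic example (here $\mathbb{Z}_p $ denotes the $p $-adic integers and the product runs over all primes) :

$$\widehat{G} = \varprojlim_{N} G/N, \qquad \widehat{\mathbb{Z}} = \varprojlim_{n} \mathbb{Z}/n\mathbb{Z} \simeq \prod_{p} \mathbb{Z}_p$$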

Right, so all we have to do to find a topological equivalent of an algebraic scheme is to compute its algebraic fundamental group and find an existing topological space of which the profinite completion of its standard fundamental group coincides with our algebraic fundamental group. An example : a prime number $p $ (as a ‘point’ in $\mathbf{spec}(\mathbb{Z}) $) is the closed subscheme $\mathbf{spec}(\mathbb{F}_p) $ corresponding to the finite field $\mathbb{F}_p = \mathbb{Z}/p\mathbb{Z} $. For the affine scheme $\mathbf{spec}(K) $ of a field $K $, the algebraic fundamental group coincides with the absolute Galois group $Gal(\overline{K}/K) $. In the case of $\mathbb{F}_p $ we all know that this absolute Galois group is isomorphic to the profinite integers $\hat{\mathbb{Z}} $. Now, what is the first topological space coming to mind having the integers as its fundamental group? Right, the circle $S^1 $. Hence, in arithmetic topology we view prime numbers as topological circles, that is, as knots in some bigger space.
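
To see the finite levels of this $\hat{\mathbb{Z}} $ concretely, here is a small sketch (the function name `frobenius_order` is made up for illustration). It uses the standard fact that the nonzero elements of the field with $p^n $ elements form a cyclic group of order $p^n - 1 $, so the Frobenius map $x \mapsto x^p $ acts on exponents as multiplication by $p $. Its order as an automorphism is then the multiplicative order of $p $ modulo $p^n - 1 $, which should come out as $n $, in line with $Gal(\mathbb{F}_{p^n}/\mathbb{F}_p) \simeq \mathbb{Z}/n\mathbb{Z} $.

```python
def frobenius_order(p: int, n: int) -> int:
    """Order of the Frobenius map x -> x**p on the field with p**n elements.

    The nonzero elements form a cyclic group of order p**n - 1. On a
    generator g, the k-th power of Frobenius sends g**e to g**(e * p**k),
    so it is the identity exactly when p**k == 1 modulo p**n - 1.
    """
    m = p**n - 1
    if m == 1:
        # p = 2, n = 1: only one nonzero element, Frobenius is the identity.
        return 1
    k, x = 1, p % m
    while x != 1:
        x = (x * p) % m
        k += 1
    return k


if __name__ == "__main__":
    for p in (2, 3, 5):
        # For each prime, list the Frobenius order for n = 1, ..., 6.
        print(p, [frobenius_order(p, n) for n in range(1, 7)])
```

Taking the projective limit of these cyclic groups $\mathbb{Z}/n\mathbb{Z} $ over all $n $ is what produces $\hat{\mathbb{Z}} $, with Frobenius as a topological generator.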

But then, what is this bigger space? That is, what is the topological equivalent of $\mathbf{spec}(\mathbb{Z}) $? For this we have to go back to Mazur’s original paper Notes on etale cohomology of number fields in which he gives an Artin-Verdier type duality theorem for the affine spectrum $X=\mathbf{spec}(D) $ of the ring of integers $D $ in a number field. More precisely, there is a non-degenerate pairing $H^r_{et}(X,F) \times Ext^{3-r}_X(F, \mathbb{G}_m) \rightarrow H^3_{et}(X,\mathbb{G}_m) \simeq \mathbb{Q}/\mathbb{Z} $ for any constructible abelian sheaf $F $. This may not tell you much, but it is a ‘sort of’ Poincaré duality result one would have for a compact three-dimensional manifold.
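
For comparison, here is a sketch of the classical statement this pairing is modelled on, with finite coefficients $\mathbb{Z}/n\mathbb{Z} $ chosen purely for illustration : for a closed oriented 3-manifold $M $, the cup product gives a perfect pairing

$$H^r(M,\mathbb{Z}/n\mathbb{Z}) \times H^{3-r}(M,\mathbb{Z}/n\mathbb{Z}) \rightarrow H^3(M,\mathbb{Z}/n\mathbb{Z}) \simeq \mathbb{Z}/n\mathbb{Z}$$

In both situations the complementary degrees add up to 3, which is where the dimension of the would-be manifold comes from.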

Ok, so in particular $\mathbf{spec}(\mathbb{Z}) $ should be thought of as a 3-dimensional compact manifold, but which one? For this we have to compute the algebraic fundamental group. Fortunately, this group is trivial as there are no (non-split) etale covers of $\mathbf{spec}(\mathbb{Z}) $, so the corresponding 3-manifold should be simply connected… but we now know, thanks to Perelman’s proof of the Poincaré conjecture, that this implies that the manifold must be $S^3 $, the 3-sphere! Summarizing : in arithmetic topology, prime numbers are knots in the 3-sphere!

More generally (by the same arguments) the affine spectrum $\mathbf{spec}(D) $ of a ring of integers can be thought of as corresponding to a closed oriented 3-dimensional manifold $M $ (which is a cover of $S^3 $) and a prime ideal $\mathfrak{p} \triangleleft D $ corresponds to a knot in $M $.

But then, what is an ideal $\mathfrak{a} \triangleleft D $? Well, we have unique factorization of ideals in $D $, that is, $\mathfrak{a} = \mathfrak{p}_1^{n_1} \ldots \mathfrak{p}_k^{n_k} $ and therefore $\mathfrak{a} $ corresponds to a link in $M $ of which the constituent knots are the ones corresponding to the prime ideals $\mathfrak{p}_i $.
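
In the simplest case $D = \mathbb{Z} $ every ideal is generated by a positive integer $n $, and the factorization of ideals is just the prime factorization of $n $. The sketch below (the function names `prime_factorization` and `link_components` are hypothetical, chosen for illustration) lists the primes that would serve as the component knots of the link attached to the ideal $(n) $, using plain trial division.

```python
def prime_factorization(n: int) -> dict[int, int]:
    """Return {prime: exponent} for a positive integer n, by trial division."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    factors: dict[int, int] = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:
        # Whatever remains after trial division is itself prime.
        factors[n] = factors.get(n, 0) + 1
    return factors


def link_components(n: int) -> list[int]:
    """Primes dividing n: one 'knot' per distinct prime in the ideal (n)."""
    return sorted(prime_factorization(n))


if __name__ == "__main__":
    print(prime_factorization(360))  # 360 = 2^3 * 3^2 * 5
    print(link_components(360))
```

Note that the exponents $n_i $ are recorded by `prime_factorization` but play no role in `link_components` : the link only sees which primes occur. The unit ideal $(1) $ gives the empty link.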

And we can go on like this. What should be an element $w \in D $? Well, it will be an embedded surface $S \rightarrow M $, possibly with a boundary, the boundary being the link corresponding to the ideal $\mathfrak{a} = Dw $ and Seifert’s algorithm tells us how we can produce surfaces having any prescribed link as its boundary. But then, in particular, a unit $w \in D^* $ should correspond to a closed surface in $M $.

And all these analogies carry much further : for example the class group of the ring of integers $Cl(D) $ then corresponds to the torsion part $H_1(M,\mathbb{Z})_{tor} $ because principal ideals $Dw $ are trivial in the class group, just as boundaries of surfaces $\partial S $ vanish in $H_1(M,\mathbb{Z}) $. Similarly, one may identify the unit group $D^* $ with $H_2(M,\mathbb{Z}) $… and so on, and on, and on…

More links to papers on arithmetic topology can be found in John Baez’ week 257 or via here.
