Wednesday, Alexander Smirnov (Steklov Institute) gave the first talk in the $\mathbb{F}_1$ world seminar. Here’s his title and abstract:

Title: The 10th Discriminant and Tensor Powers of $\mathbb{Z}$

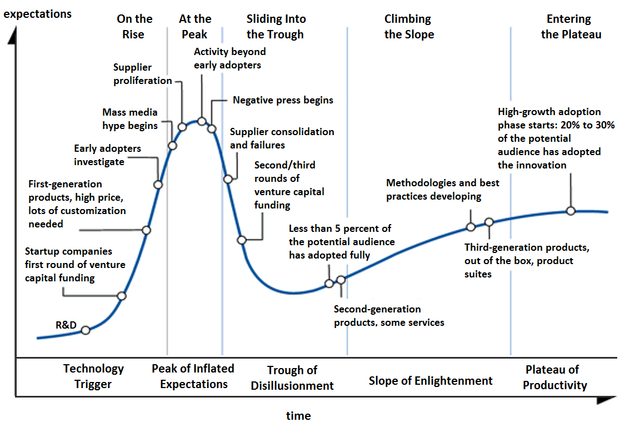

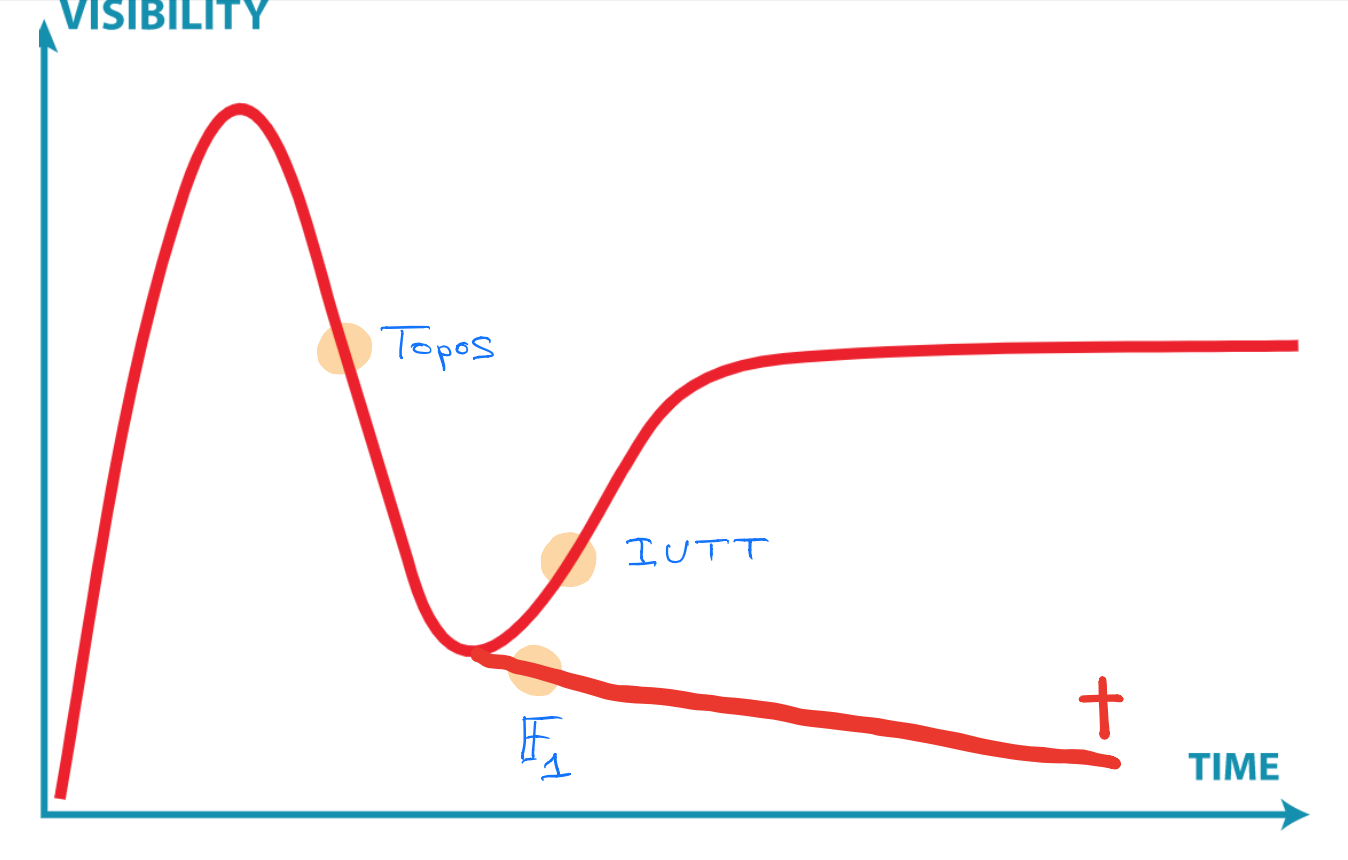

“We plan to discuss very shortly certain achievements and disappointments of the $\mathbb{F}_1$-approach. In addition, we will consider a possibility to apply noncommutative tensor powers of $\mathbb{Z}$ to the Riemann Hypothesis.”

Here’s his talk, and part of the comments section:

Smirnov urged us to pay attention to a 1933 result by Max Deuring in Imaginäre quadratische Zahlkörper mit der Klassenzahl 1:

“If there are infinitely many imaginary quadratic fields with class number one, then the RH follows.”

Of course, we now know that there are exactly nine such fields (whence there is no ‘tenth discriminant’ as in the title of the talk), and one can deduce anything from a false statement.

Deuring’s argument, of course, was different:

The zeta function $\zeta_{\mathbb{Q}(\sqrt{-d})}(s)$ of a quadratic field $\mathbb{Q}(\sqrt{-d})$ is the Dirichlet series whose $n$-th coefficient counts the non-zero ideals $\mathfrak{a}$ of norm $n$ in the ring of integers, that is

\[
\zeta_{\mathbb{Q}(\sqrt{-d})}(s) = \sum_{n \geq 1} \#\{ \mathfrak{a} : N(\mathfrak{a}) = n \} \, n^{-s}
\]

It is equal to $\zeta(s) \cdot L(s,\chi_d)$, where $\zeta(s)$ is the usual Riemann zeta function and $L(s,\chi_d)$ is the $L$-function of the character $\chi_d(n) = \left(\frac{-4d}{n}\right)$.
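To see this factorisation at work in the simplest case, here is a small Python sketch for $d=1$, so for the Gaussian integers $\mathbb{Z}[i]$ (which have class number one). Comparing Dirichlet coefficients, the factorisation says that the number of ideals of norm $n$ equals $\sum_{m \mid n} \chi(m)$. The function names are my own, and the ideal count uses the fact that $\mathbb{Z}[i]$ is a principal ideal domain with four units, so every non-zero ideal has exactly four generators.

```python
def chi(n: int) -> int:
    """The character chi_d for d = 1, i.e. the Kronecker symbol (-4/n)."""
    if n % 2 == 0:
        return 0
    return 1 if n % 4 == 1 else -1


def ideals_of_norm(n: int) -> int:
    """Count the ideals of norm n in Z[i] by brute force.

    Each non-zero ideal is generated by exactly 4 elements a + bi
    (one per unit), all of norm a^2 + b^2 = n.
    """
    bound = int(n ** 0.5) + 1
    generators = sum(
        1
        for a in range(-bound, bound + 1)
        for b in range(-bound, bound + 1)
        if a * a + b * b == n
    )
    return generators // 4


def convolution_coefficient(n: int) -> int:
    """n-th Dirichlet coefficient of zeta(s) * L(s, chi): sum of chi(m) over m | n."""
    return sum(chi(m) for m in range(1, n + 1) if n % m == 0)


if __name__ == "__main__":
    for n in range(1, 31):
        print(n, ideals_of_norm(n), convolution_coefficient(n))
```

The two columns printed should agree for every $n$; this only checks coefficients numerically, of course, and is no substitute for the proof.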

Now, if the class number of $\mathbb{Q}(\sqrt{-d})$ is one (that is, its ring of integers is a principal ideal domain), then Deuring was able to relate $\zeta_{\mathbb{Q}(\sqrt{-d})}(s)$ to $\zeta(2s)$ with an error term depending on $d$, and this error term vanishes if we can let $d \rightarrow \infty$.

So, if there were infinitely many imaginary quadratic fields with class number one we would have the equality

\[
\zeta(s) \cdot \lim_{d \rightarrow \infty} L(s,\chi_d) = \zeta(2s)
\]

Now, take a complex number $s \not= 1$ with real part strictly greater than $\frac{1}{2}$. Then $2s$ has real part greater than $1$, so $\zeta(2s) \not= 0$. But then, from the equality, it follows that $\zeta(s) \not= 0$, which is the RH.

To extend (a version of) the Deuring-argument to the $\mathbb{F}_1$-world, Smirnov wants to have many examples of commutative rings $A$ whose multiplicative monoid $A^{\times}$ is isomorphic to $\mathbb{Z}^{\times}$, the multiplicative monoid of the integers.

What properties must $A$ have?

Well, it can have only two units, it must be a unique factorisation domain, and it must have countably infinitely many irreducible elements (up to units). For example, $\mathbb{F}_3[x_1,\dots,x_n]$ will do!
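As a sanity check on the example, here is a brute-force Python sketch listing the monic irreducible polynomials of small degree in $\mathbb{F}_3[x]$ (the one-variable case). The units are just $\pm 1$, and the monic irreducibles play the role of the positive primes in $\mathbb{Z}$. The representation of polynomials as coefficient tuples and all function names are my own choices.

```python
from itertools import product

P = 3  # we work over the field F_3


def monic_polys(degree):
    """All monic polynomials of this degree over F_3, as coefficient tuples (lowest degree first)."""
    return [coeffs + (1,) for coeffs in product(range(P), repeat=degree)]


def multiply(f, g):
    """Product of two polynomials over F_3."""
    result = [0] * (len(f) + len(g) - 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            result[i + j] = (result[i + j] + a * b) % P
    return tuple(result)


def monic_irreducibles(degree):
    """Monic polynomials of this degree that are not a product of two monic factors of lower positive degree."""
    reducible = {
        multiply(f, g)
        for k in range(1, degree // 2 + 1)
        for f in monic_polys(k)
        for g in monic_polys(degree - k)
    }
    return [f for f in monic_polys(degree) if f not in reducible]


if __name__ == "__main__":
    for n in range(1, 5):
        print(n, len(monic_irreducibles(n)))
```

There are irreducibles in every degree, so one never runs out of 'primes', which is what the isomorphism with $\mathbb{Z}^{\times}$ needs.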

(Note to self: contemplate the fact that all such rings share the same arithmetic site.)

Each such ring $A$ becomes a $\mathbb{Z}$-module by defining a new addition $+_{new}$ on it via

\[
a +_{new} b = \sigma^{-1}(\sigma(a) +_{\mathbb{Z}} \sigma(b))
\]

where $\sigma : A^{\times} \rightarrow \mathbb{Z}^{\times}$ is the isomorphism of multiplicative monoids, and on the right hand side we have the usual addition on $\mathbb{Z}$.
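To get a feel for this transported addition, here is a toy Python sketch. It is not one of Smirnov's examples: I take $A = \mathbb{Z}$ itself and, as an assumption for illustration only, let $\sigma$ be the monoid automorphism of $\mathbb{Z}^{\times}$ that swaps the primes $2$ and $3$. The names `swap_2_and_3` and `add_new` are mine.

```python
def swap_2_and_3(n: int) -> int:
    """Monoid automorphism of (Z, *): exchange the exponents of 2 and 3 in n."""
    if n == 0:
        return 0
    sign = -1 if n < 0 else 1
    n = abs(n)
    e2 = e3 = 0
    while n % 2 == 0:
        n //= 2
        e2 += 1
    while n % 3 == 0:
        n //= 3
        e3 += 1
    # n is now coprime to 6; put the exponents back, swapped
    return sign * n * 2 ** e3 * 3 ** e2


sigma = swap_2_and_3
sigma_inverse = swap_2_and_3  # the swap is its own inverse


def add_new(a: int, b: int) -> int:
    """The transported addition: sigma^{-1}(sigma(a) + sigma(b))."""
    return sigma_inverse(sigma(a) + sigma(b))


if __name__ == "__main__":
    print(add_new(1, 1))  # sigma^{-1}(2) = 3
    print(add_new(3, 3))  # sigma^{-1}(2 + 2) = sigma^{-1}(4) = 9
```

Because $\sigma$ is multiplicative, the old multiplication still distributes over the new addition, so $(\mathbb{Z}, +_{new}, \cdot)$ is again a ring, isomorphic to $\mathbb{Z}$ via $\sigma$.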

But then, any pair $(A,A')$ of such rings will give us a module over the ring $\mathbb{Z} \boxtimes_{\mathbb{Z}^{\times}} \mathbb{Z}$.

It was not so clear to me what this ring is (if you know, please drop a comment), but I guess it must be a commutative ring having all these properties, and being a quotient of the ring $\mathbb{Z} \boxtimes_{\mathbb{F}_1} \mathbb{Z}$, the coordinate ring of the elusive arithmetic plane

\[
\mathbf{Spec}(\mathbb{Z}) \times_{\mathbf{Spec}(\mathbb{F}_1)} \mathbf{Spec}(\mathbb{Z})
\]

Smirnov’s hope is that someone can use a Deuring-type argument to prove:

“If $\mathbb{Z} \boxtimes_{\mathbb{Z}^{\times}} \mathbb{Z}$ is ‘sufficiently complicated’, then the RH follows.”

If you want to attend the seminar when it happens, please register for the seminar’s mailing list.